Event Recap: The Future of AI Agents: Security, Speed, and Scaling 📈

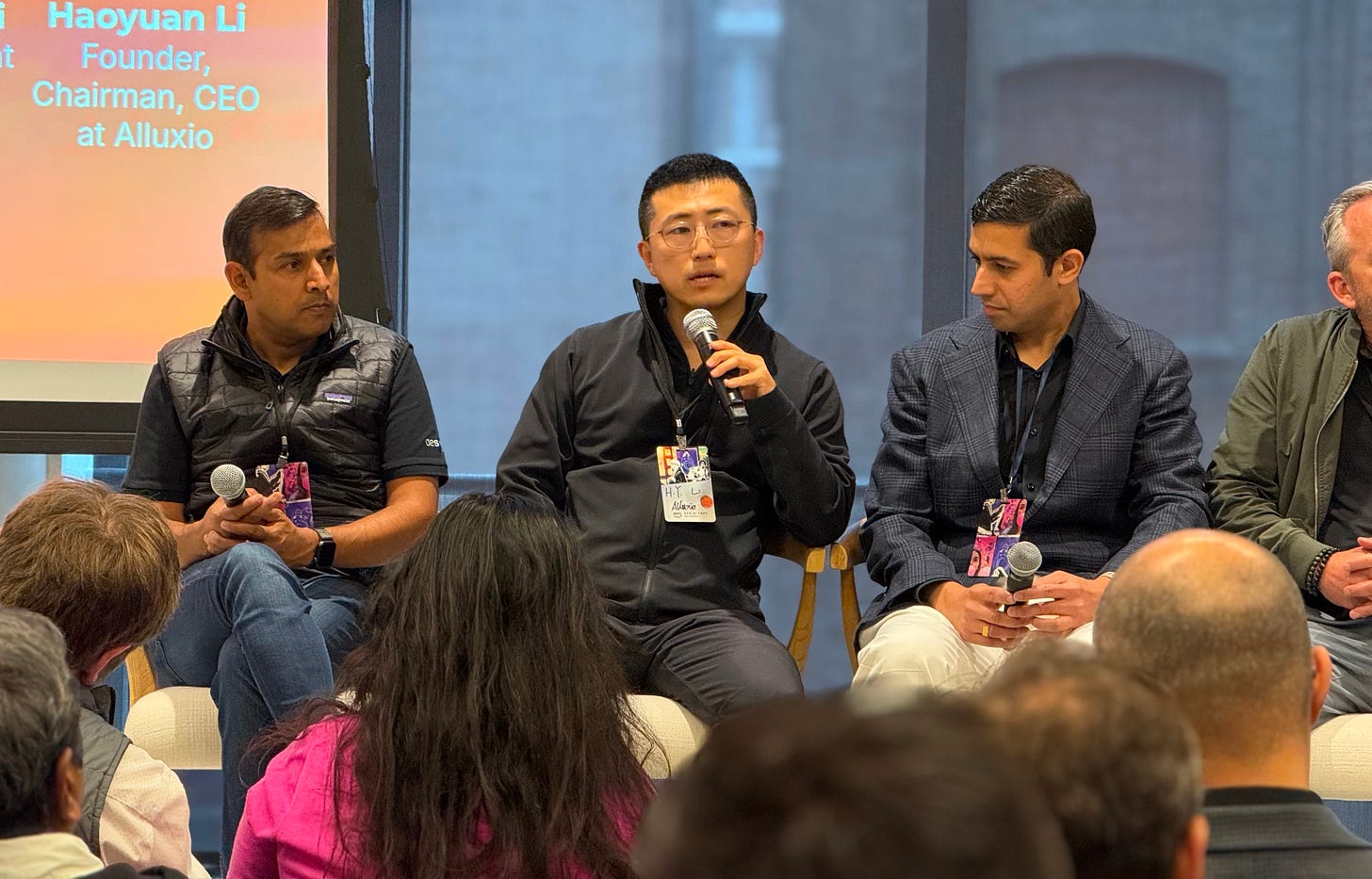

On Jul 30, we brought together founders, builders, and investors for an unfiltered deep dive into one of the most exciting and chaotic frontiers in tech: AI agents and infrastructure. From infrastructure bottlenecks to security nightmares, venture trends to go-to-market wins, our panel didn’t hold back.

The conversation spanned hard-won scaling lessons, the thorny realities of securing agent-to-agent workflows, and why the winners of the AI agent wave might look nothing like the platforms we use today.

🤝 Shout out to our partners Revela, Descope, and AWS for helping make this event possible!

Revela is an AI-first dev shop partnering with Seed & Series A startups in SF to build product and infra. It helps teams accelerate roadmaps, cut inference costs, and scale to the next milestone. Book a free 1:1 with our CEO Mike here to see how we can help your team push more code and scale your AI infra. Follow our LinkedIn. Learn More

Descope is a no/low-code platform for managing identity across customers, partners, and AI agents. Developers use Descope to secure APIs, MCP servers, and agents with authentication, authorization, consent, and tokens. Follow on LinkedIn or X for ongoing dev updates. Learn how Descope enables agentic identity. Visit our AI demo microsite.

Featured Speakers

Brian Quinn, President & GM, North America at AppsFlyer; Managing Director Americas at App Annie; GVP at Kenshoo; Director at Experian, AT&T & Cisco

Rishi Bhargava, Co-founder of Descope ($53M raised), ex-Co-Founder of Demisto (acquired by Palo Alto Networks), ex-GM & VP Software at Intel, ex-VP PM at McAfee

Suraj Patel, VP Ventures & Corporate Development at MongoDB; ex-Principal at Bow Capital

Siddharth Bhai, Product Leader at Databricks, ex-Product Director at Splunk; Lead PM at Google; ex-Principal PM at Microsoft

Haoyuan Li, Founder, Chairman, CEO at Alluxio (Series C+), Adjunct Professor at Peking University

💡 TL;DR – Key Highlights

MCP is exploding—but security is lagging: Tens of thousands of servers deployed, but few are production-ready.

AI agent autonomy will demand new access control models: The “cruise control to full self-driving” shift is coming faster than expected.

Infra bottlenecks are real—and solvable: Latency in cloud storage can cripple inference; caching and data access optimization are huge levers.

Go-to-market still decides who wins: Proprietary data, deep domain expertise, and clear ROI beat “just another agent” every time.

⚡ MCP Mania: Growth Outpacing Security

Anthropic’s Model Context Protocol (MCP) launched only in late 2024, yet by early 2025 there were tens of thousands of MCP servers in the wild. That hockey-stick adoption curve is exciting, but as Rishi Bhargava pointed out, “The vast majority are local developer deployments. Production-ready remote servers? You can count them on two hands.”

The gap between experimentation and enterprise readiness is wide. Early MCP specs didn’t even define authentication, making them unsuitable for exposing sensitive APIs. Spec revisions in 2025 added OAuth-based authorization flows and a clearer split between authorization servers and resource servers, but adoption of those practices is still slow.

Suraj Patel added that in many industries—like automotive, healthcare, and logistics—core SaaS providers aren’t building MCP servers at all. This creates opportunities for startups to be the integration layer, connecting “closed” software to the agentic world. But that opportunity is fragile: incumbents can close the gap quickly if they decide to launch first-party agents.

Brian Quinn warned of mismatched expectations: “C-levels believe they’ll get instant access to insights just by turning on MCP. The reality is, it takes iteration to get useful, accurate, and safe results.”

🔐 Rethinking Access Control for AI Agents

Traditional permission models assume a human is at the keyboard. In an agentic world, that assumption fails.

Rishi framed the shift as the same journey autonomous vehicles took:

Phase 1: Agents act only with tightly scoped, read-only permissions (the “cruise control” stage).

Phase 2: Incremental expansion into write actions and system integrations (lane keeping, assisted steering).

Phase 3: Full autonomy—agents can perform end-to-end workflows—but only with robust monitoring and fail-safes (full self-driving).

Siddharth Bhai described two dominant models emerging:

Run-as-owner – The agent inherits all the permissions of the invoking user.

Run-as-viewer – The agent can only access resources the viewer is explicitly authorized for.

The “viewer” model reduces risk but is harder to implement—it requires unified governance across all data sources and AI endpoints. Many teams still default to “run-as-owner” for speed, creating future security debt. As Siddharth put it, “What’s expedient isn’t always what’s right—but market pressures mean both models will be in play for years.”
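To make the difference concrete, here is a minimal Python sketch of the two models. Everything in it is hypothetical and for illustration only: the `ACL` table, the `can_read` and `agent_read` helpers, and the user and resource names are assumptions, not any vendor's actual permission API. The sketch also assumes "owner" means the user whose credentials the agent inherits and "viewer" means the person consuming the agent's output.

```python
# Hypothetical access-control list: resource -> users allowed to read it.
ACL = {
    "sales_dashboard": {"alice", "bob"},
    "payroll_table": {"alice"},
}


def can_read(user: str, resource: str) -> bool:
    """Return True if `user` is explicitly authorized for `resource`."""
    return user in ACL.get(resource, set())


def agent_read(resource: str, owner: str, viewer: str, mode: str) -> bool:
    """Decide whether an agent may read `resource` under a given mode.

    run-as-owner:  check the owner's permissions (assumed: the user whose
                   credentials the agent inherits).
    run-as-viewer: check the permissions of the person viewing the output.
    """
    if mode == "run-as-owner":
        return can_read(owner, resource)
    if mode == "run-as-viewer":
        return can_read(viewer, resource)
    raise ValueError(f"unknown mode: {mode}")


# Alice owns the agent; Bob is looking at its output.
owner_mode = agent_read("payroll_table", "alice", "bob", "run-as-owner")
viewer_mode = agent_read("payroll_table", "alice", "bob", "run-as-viewer")
print(owner_mode, viewer_mode)
```

In this toy setup, run-as-owner would surface payroll data to Bob through the agent even though Bob has no direct access, which is the kind of security debt described above. Run-as-viewer blocks it, but only because a single `ACL` covers every resource, which is the unified-governance requirement in miniature.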

🛡️ Data Governance as a Competitive Advantage

When agents start pulling customer data, the compliance stakes escalate fast. Brian Quinn described how AppsFlyer built its Privacy Cloud, a secure collaboration layer for marketing analytics. The approach allows advertisers to run AI models over campaign data without directly sharing sensitive identifiers.

In advertising, AI thrives on large, granular datasets—but privacy laws demand minimization. “It’s a paradox,” Brian said. “You need more data for better models, but the law says use less.”

Suraj noted that MongoDB customers are increasingly designing governance into their AI pipelines from day one—structuring metadata, implementing role-based access, and separating inference data from training data. Databricks is taking a similar approach with its governance framework, ensuring that policies apply equally to raw datasets, embeddings, and model endpoints.

The emerging consensus: governance isn’t just compliance—it’s a differentiator when selling into regulated industries.

⚙️ The Hidden Bottleneck: Cloud Storage Latency

Infrastructure talk got unusually animated when Haoyuan Li shared that some AI teams are running $50M GPU clusters at 30% utilization simply because their data layer can’t feed the compute fast enough.

The common culprit: public cloud object storage. While S3 and GCS are cost-effective for massive datasets, they’re too slow for inference pipelines that require rapid, repeated access. “It’s not unusual to see 10–30 seconds just to list a folder of files,” Haoyuan explained.

The fix is surprisingly straightforward: caching layers that sit between compute and storage. Salesforce, for example, saw nearly 1000× improvement in certain inference queries after deploying Alluxio’s caching layer. That kind of boost doesn’t just save money—it enables product features that were previously impossible due to latency.
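As a rough illustration of the idea, the Python sketch below puts a small read-through LRU cache in front of a slow fetch function. It is a toy under stated assumptions, not how Alluxio works internally: `ReadThroughCache` and `slow_object_store_get` are hypothetical names, and the "object store" is just a local function that records how often it gets called.

```python
from collections import OrderedDict
from typing import Callable, List


class ReadThroughCache:
    """Tiny LRU read-through cache in front of a slow fetch function."""

    def __init__(self, fetch: Callable[[str], bytes], capacity: int = 128) -> None:
        self._fetch = fetch
        self._capacity = capacity
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._items:
            # Cache hit: serve locally and mark the key as recently used.
            self.hits += 1
            self._items.move_to_end(key)
            return self._items[key]
        # Cache miss: go to the slow backend once, then remember the result.
        self.misses += 1
        value = self._fetch(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            # Evict the least recently used entry.
            self._items.popitem(last=False)
        return value


# Hypothetical stand-in for an object-store read (S3, GCS, ...).
backend_calls: List[str] = []


def slow_object_store_get(key: str) -> bytes:
    backend_calls.append(key)
    return f"contents of {key}".encode()


cache = ReadThroughCache(slow_object_store_get, capacity=2)
for key in ["model.bin", "model.bin", "index.bin", "model.bin"]:
    cache.get(key)

print(cache.hits, cache.misses, len(backend_calls))
```

Four reads result in only two trips to the backend. With repeated access patterns like inference, the hit rate is what determines how much storage latency the compute layer actually sees.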

For founders, the lesson is clear: infra optimization isn’t just for later—it can be the difference between a functional MVP and a non-starter.

🎥 Multimodal Is Here—And It’s Messy

The industry has moved from single-modality (text-only) to multimodal (text, image, video, audio) faster than many infra teams expected. This introduces complex challenges in storage, search, and retrieval.

Suraj emphasized the central role of metadata—the descriptive layer that makes it possible to find the right asset without searching the entire dataset. Without rich metadata, embedding search breaks down, especially when mixing modalities.
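One way to picture the role of metadata is a search that narrows candidates by metadata first and only then ranks by embedding similarity. The Python sketch below is a simplified illustration with made-up data: the `ASSETS` list, its field names, and the three-dimensional embeddings are all assumptions, and a real system would use a vector database rather than a list scan.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical multimodal catalog: metadata plus a toy embedding per asset.
ASSETS: List[Dict] = [
    {"id": "clip-1", "modality": "video", "lang": "en", "embedding": [0.9, 0.1, 0.0]},
    {"id": "img-7", "modality": "image", "lang": "en", "embedding": [0.8, 0.2, 0.1]},
    {"id": "clip-2", "modality": "video", "lang": "de", "embedding": [0.1, 0.9, 0.2]},
]


def search(query_embedding: Sequence[float], top_k: int = 1, **filters) -> List[str]:
    """Filter by metadata first, then rank the survivors by similarity."""
    candidates = [
        asset for asset in ASSETS
        if all(asset.get(field) == value for field, value in filters.items())
    ]
    ranked = sorted(
        candidates,
        key=lambda asset: cosine(query_embedding, asset["embedding"]),
        reverse=True,
    )
    return [asset["id"] for asset in ranked[:top_k]]


video_hits = search([1.0, 0.0, 0.0], modality="video")
image_hits = search([1.0, 0.0, 0.0], modality="image")
print(video_hits, image_hits)
```

The same query vector returns different assets depending on the metadata filter, and the similarity ranking only ever runs over assets that already match, which is why thin or missing metadata hurts both relevance and cost.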

Haoyuan noted that the multimodal journey mirrors the broader AI lifecycle:

Train or fine-tune the right model quickly.

Deploy to production and scale inference.

Avoid letting the data layer become the bottleneck.

Siddharth shared a tangible example: five years ago, real-time language translation was painfully slow and inaccurate. Today, multimodal translation apps can handle live conversation, switching seamlessly between audio, text, and video. “It’s a leapfrog moment—if the UX is right, adoption can go exponential almost overnight.”

💸 Where the AI Infra Money Is Flowing

Suraj walked through the venture funding timeline:

Late 2022–Early 2023: Massive checks for model hosting, vector databases, and frameworks (LangChain, LlamaIndex).

Mid–Late 2023: Shift to application-layer AI and infra that improves inference performance (e.g., Fireworks).

2024–2025: Growing demand for durable execution (handling long-running, multi-step tasks), identity/auth solutions for agents, and evaluation tooling.

Why the shift? Two reasons:

Many “core infra” plays have been commoditized by hyperscalers.

Application adoption patterns are changing too fast for single-point infra bets without deep defensibility.

Rishi added that security and identity in the agentic world are still “unsolved and unavoidable”—a clear area for future investment.

🚨 Security Threats in the Age of Open Agents

Letting agents connect to each other or your internal systems introduces a fundamentally different risk profile.

Rishi warned that “all the old models—firewalls, DLP, user-based permissions—were built for human workflows. They don’t map to agents that can chain actions across systems.”

Recent incidents, like an autonomous agent deleting a production database, underline the need for agent-aware security tooling—from permission models to anomaly detection tuned for non-deterministic behavior.

Siddharth likened it to onboarding a new human hire: “You give them one task, watch how they do it, then slowly increase responsibility. Agents need the same staged trust model—with the ability to instantly roll back actions.”
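A staged trust model with rollback can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `SupervisedAgent` class, the three trust levels in `LEVELS`, and the undo-log approach are all hypothetical, and real systems would also need audit trails and handling for actions that cannot be undone.

```python
from typing import Callable, List

# Hypothetical trust levels, lowest to highest.
LEVELS = {"read": 0, "write": 1, "admin": 2}


class SupervisedAgent:
    """Agent wrapper that gates actions by trust level and records undo steps."""

    def __init__(self, trust: str = "read") -> None:
        self.trust = trust
        self._undo_log: List[Callable[[], object]] = []

    def perform(self, required: str, do: Callable[[], object],
                undo: Callable[[], object]) -> bool:
        """Run `do` only if the agent's trust covers `required`."""
        if LEVELS[required] > LEVELS[self.trust]:
            return False  # Not trusted enough yet; nothing is executed.
        do()
        self._undo_log.append(undo)
        return True

    def promote(self, level: str) -> None:
        """Raise (or lower) the agent's trust after a human review."""
        self.trust = level

    def rollback(self) -> None:
        """Undo every recorded action, most recent first."""
        while self._undo_log:
            self._undo_log.pop()()


records: List[str] = []
agent = SupervisedAgent()  # starts with read-only trust

blocked = agent.perform("write", lambda: records.append("row"), lambda: records.pop())
agent.promote("write")
allowed = agent.perform("write", lambda: records.append("row"), lambda: records.pop())
after_write = list(records)
agent.rollback()

print(blocked, allowed, after_write, records)
```

The write is refused until the agent is promoted, and the rollback returns `records` to its starting state, mirroring the "one task, watch, then expand" approach described above.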

📈 Go-To-Market: The Real Differentiator

With hundreds of agent startups launching, Suraj was blunt: “If you don’t have proprietary data, deep workflow expertise, or a measurable ROI, you’re going to be competing with ChatGPT plug-ins for free.”

Brian illustrated what good GTM looks like: AppsFlyer’s creative analytics tool uses computer vision to analyze ad videos, identify the elements that drive conversions, and feed those insights back into creative production. Customers in gaming, e-commerce, and fintech have seen dramatic drops in customer acquisition costs.

Siddharth connected the dots back to his Google Ads days, where aligning sales efforts to customer KPIs unlocked both adoption and upsell. “You’re not selling AI—you’re selling a business outcome. The AI is just how you get there.”

Final Takeaway

The panel’s advice to founders was consistent:

Build for trust: Agent security, permissions, and governance are not optional.

Optimize infra early: Bottlenecks at scale are expensive and morale-killing.

Anchor on ROI: Whether it’s time saved, revenue gained, or cost reduced, show it fast.

A huge thank you to everyone who joined us for this deep, candid conversation. If you missed it, we’d love to see you at a future EntreConnect event—whether you’re building agents, investing in infra, or just curious about where AI is headed.

Let’s keep learning, building, and pushing the boundaries of what’s possible. 🚀

👉 Follow us on LinkedIn and Luma for future event invites and exclusive takeaways

🗓️ Join us at our next gathering—we’d love to see you there!

🎤 Interested in speaking at a future event? Reach out—we’re always looking for inspiring founders and industry leaders to share their stories & insights!

💬 LinkedIn Challenge: Share, Learn, Connect

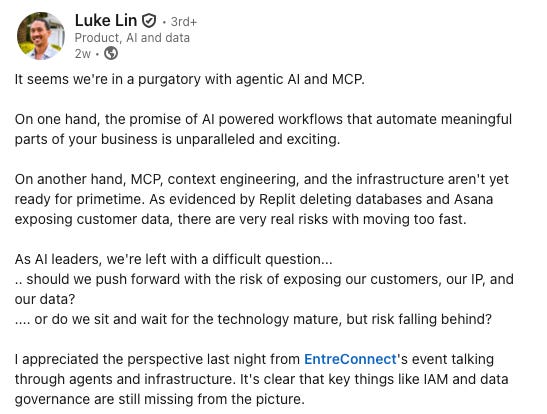

Thank you to everyone who participated in our LinkedIn Challenge! We're thrilled to feature the most engaging and inspiring post (link here), giving our community a chance to celebrate and learn from the experience. We also truly appreciate everyone who shared their best moments and insights with us!

🗓️ Upcoming Events

Aug 24, San Jose, CA | Startup Pitch Salon | Investor Feedback + Audience Vote. Calling for startup founders! We are excited to co-host a highly curated startup pitch event with WeShine, bringing together top early-stage founders, seasoned investors, and engaged audiences for an event of insight, feedback, and community.